Raihan Ur Rasool

Hafiz Farooq Ahmad

Wajid Rafique

Adnan Qayyum

Junaid Qadir

Zahid Anwar

College of Engineering and Science, Victoria University, Melbourne, VIC 8001, Australia

Computer Science Department, College of Computer Sciences and Information Technology (CCSIT), King Faisal University, P.O. Box 400, Al-Ahsa 31982, Saudi Arabia

Department of Electrical and Software Engineering, University of Calgary, Calgary, AB T2N 1N4, Canada

Department of Computer Science, Information Technology University (ITU), Lahore 40050, Pakistan

Department of Computer Science and Engineering, College of Engineering, Qatar University, Doha 2713, Qatar

Department of Computer Science, Sheila and Robert Challey Institute for Global Innovation and Growth, North Dakota State University (NDSU), Fargo, ND 58108, USA

Author to whom correspondence should be addressed.

Future Internet 2023, 15(3), 94; https://doi.org/10.3390/fi15030094

Submission received: 28 January 2023 / Revised: 23 February 2023 / Accepted: 24 February 2023 / Published: 27 February 2023

In recent years, the interdisciplinary field of quantum computing has rapidly developed and garnered substantial interest from both academia and industry due to its ability to process information in fundamentally different ways, leading to hitherto unattainable computational capabilities. However, despite its potential, the full extent of quantum computing’s impact on healthcare remains largely unexplored. This survey paper presents the first systematic analysis of the various capabilities of quantum computing in enhancing healthcare systems, with a focus on its potential to revolutionize compute-intensive healthcare tasks such as drug discovery, personalized medicine, DNA sequencing, medical imaging, and operational optimization. Through a comprehensive analysis of existing literature, we have developed taxonomies across different dimensions, including background and enabling technologies, applications, requirements, architectures, security, open issues, and future research directions, providing a panoramic view of the quantum computing paradigm for healthcare. Our survey aims to aid both new and experienced researchers in quantum computing and healthcare by helping them understand the current research landscape, identifying potential opportunities and challenges, and making informed decisions when designing new architectures and applications for quantum computing in healthcare.

In recent years, advances in computing technology have made processing large-scale data feasible. Quantum computing (QC) has shown potential for solving complex tasks much faster than classical computers. Healthcare, in particular, stands to benefit from QC as the volume and diversity of health data increase exponentially. For instance, during the COVID-19 pandemic, novel virus variants emerged, challenging healthcare professionals who were sequencing the virus genome using traditional computing systems. This highlights the need to explore new ways to speed up healthcare analysis and monitoring efforts to efficiently handle future pandemic situations. QC promises a revolutionary approach to improving healthcare technologies. While previous research has demonstrated how QC can introduce new possibilities for complex healthcare computations, the existing literature on QC for healthcare is largely unstructured, and the existing papers cover only a small proportion of disruptive use cases. This research provides the first systematic analysis of QC in healthcare. The following sections introduce QC, its use in healthcare, and our motivation for this survey in light of the limitations of existing surveys and their contributions.

QC is underpinned by quantum mechanics and is hence often explained through the concepts of superposition, interference, and entanglement. In quantum physics, a two-level system can be in more than one state (i.e., 1 and 0) at a given time; a QC system leverages this behavior in its basic unit of information, the qubit (quantum bit). Having roots in quantum physics, QC has the potential to become the fabric of tomorrow’s highly powerful computing infrastructures, enabling the processing of gigantic amounts of data in real time. Quantum computing has recently seen a surge of interest from researchers who are looking to take computing prowess to the next level as we move past the era of Moore’s law; however, there is a need for an in-depth systematic survey to explain its possibilities, pitfalls, and challenges.

QC is particularly well-suited to numerous compute-intensive applications of healthcare [1], especially in the current highly connected Internet of Things (IoT) digital healthcare paradigm [2,3], which encompasses interconnected medical devices (such as medical sensors) that may be connected to the Internet or the cloud. The massive increase in computational capacity is not only beneficial for healthcare IoT but can allow quantum computers to enable fundamental breakthroughs in this domain. When we leap from bits to qubits, it could improve healthcare pharmaceutical research [4], which includes analyzing the folding of proteins, determining how molecular structures, for instance, drugs and enzymes, fit together [5], determining strengths of binding interactions between a single biomolecule, for example, protein or DNA, and its ligand/binding partner such as a drug or inhibitor [6], and accelerating the process of clinical trials [7]. A few potential applications are briefly described next for an illustration. A quantum computer can perform extremely fast DNA sequencing, which opens the possibility for personalized medicine. It can enable the development of new therapies and medicines through detailed modeling. Quantum computers have the potential to create efficient imaging systems that can provide clinicians with enhanced fine-grained clarity in real time. Moreover, it can solve complex optimization problems involved in devising an optimal radiation plan that is targeted at killing cancerous cells without damaging the surrounding healthy tissues. QC is set to enable the study of molecular interactions at the lowest possible level, paving the pathway to drug discovery and medical research. Whole-genome sequencing is a time-demanding task, but with the help of qubits, whole-genome sequencing and analytics could be implemented in a limited amount of time. 
QC can revolutionize the healthcare system through modern ways of enabling on-demand computing, redefining security for medical data, predicting chronic diseases, and accurate drug discoveries.

As far as we understand, this is the first survey on quantum computing that considers security and privacy implications, applications and architecture, and quantum requirements and machine learning aspects of healthcare. There are some other surveys that consider a subset of these dimensions that merit discussion. Table 1 presents a comparative analysis of these surveys with the current work. Gyongyosi et al. [8] discuss computational limitations of traditional systems and survey superposition and quantum-entanglement-based solutions to overcome these challenges. However, this survey encompasses complex quantum mechanics without discussing its general-purpose implications for society. Fernández et al. [9] survey resource bottlenecks of IoT and discuss a solution based on quantum cryptography. They develop an edge-computing-based security solution for IoT, where management software is used to deal with security vulnerabilities. However, this is a domain-specific survey that only deals with security challenges. Gyongyosi et al. [10] discuss quantum channel capacities, which ease the quantum computing implementation for information processing. In this approach, conventional information processing is achieved through quantum channel capacities. The survey literature lists a few other quantum-computing works, including quantum learning theories [11,12], quantum information security [13,14,15,16], quantum machine learning (ML) [17,18], and quantum data analytics [19,20]. These surveys are limited in their coverage of quantum computing applications. Some of the existing works analyze the impacts of quantum computing implementation. Huang et al. [21] analyze the implementation vulnerabilities in quantum cryptography systems. Botsinis et al. [22] discuss quantum search algorithms for wireless communication. Cuomo et al. [23] survey existing challenges and solutions for quantum distributed solutions and proposed a layered abstraction to deal with communication challenges. 
Many of these surveys are only tangentially related to healthcare or do not consider healthcare at all.

| References | Year | Healthcare Focus | Security | Privacy | Architectures | Quantum Requirements | Machine/Deep Learning | Applications |
|---|---|---|---|---|---|---|---|---|
| Gyongyosi et al. [8] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Fernández et al. [9] | 2019 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Gyongyosi et al. [10] | 2018 | ✓ | ✓ |  |  |  |  |  |
| Arunachalam et al. [11] | 2017 | ✓ |  |  |  |  |  |  |
| Li et al. [12] | 2020 | ✓ | ✓ |  |  |  |  |  |
| Shaikh et al. [19] | 2016 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Egger et al. [24] | 2020 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Savchuk et al. [25] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Zhang et al. [13] | 2019 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Mcgeoch et al. [26] | 2019 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Shanon et al. [14] | 2020 | ✓ | ✓ |  |  |  |  |  |
| Duan et al. [27] | 2020 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Preskill et al. [28] | 2018 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Roetteler et al. [15] | 2018 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Uprety et al. [20] | 2020 | ✓ | ✓ | ✓ | ✓ | ✓ |  |  |
| Rowell et al. [29] | 2018 | ✓ | ✓ | ✓ |  |  |  |  |
| Padamvathi et al. [16] | 2016 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Nejatollahi et al. [30] | 2019 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Cuomo et al. [23] | 2020 | ✓ | ✓ |  |  |  |  |  |
| Fingeruth et al. [31] | 2018 | ✓ | ✓ |  |  |  |  |  |
| Huang et al. [21] | 2018 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Botsinis et al. [22] | 2018 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Ramezani et al. [17] | 2020 | ✓ | ✓ | ✓ |  |  |  |  |
| Bharti et al. [18] | 2020 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Abbott et al. [32] | 2021 | ✓ | ✓ | ✓ |  |  |  |  |
| Kumar et al. [33] | 2021 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Olgiati et al. [34] | 2021 | ✓ | ✓ | ✓ |  |  |  |  |
| Gupta et al. [35] | 2022 | ✓ | ✓ | ✓ | ✓ |  |  |  |
| Kumar et al. [36] | 2022 | ✓ | ✓ |  |  |  |  |  |
| Our Survey | 2022 | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

This survey systematically presents the evolution of quantum computing and its enabling technologies, explores the core application areas, and categorizes requirements for its implementation in high-performance healthcare systems, along with highlighting security implications. In summary, the salient contributions of this survey are as follows:

We present the first comprehensive review of quantum computing technologies for healthcare, covering its motivation, requirements, applications, challenges, architectures, and open research issues.

We discuss the enabling technologies of quantum computing that act as building blocks for the implementation of quantum healthcare service provisioning.

We discuss the core application areas of quantum computing and analyze the critical importance of quantum computing in healthcare systems.

We review the available literature on quantum computing and its inclination toward the development of future-generation healthcare systems.

We discuss key requirements of quantum computing systems for the successful implementation of large-scale healthcare services provisioning and the security implications involved.

We discuss current challenges, their causes, and future research directions for an efficient implementation of quantum healthcare systems.

This paper is organized as follows. Table 2 shows acronyms and their definition. Section 2 discusses enabling technologies of quantum computing systems. Section 3 outlines the application areas of quantum computing. Section 4 discusses the key requirements of quantum computing for its successful implementation for large-scale healthcare services provisioning. Section 5 provides a taxonomy and description of quantum computing architectural approaches for healthcare architectures. Section 6 discusses the security architectures of the current quantum computing systems. Section 7 discusses current open issues, their causes, and promising directions for future research. Finally, Section 8 concludes the paper.

In this section, we present enabling technologies of quantum computing that support the implementation of modern quantum computing systems. Specifically, we categorize quantum-computing-enabling technologies in different domains, i.e., hardware structure, control processor plane, quantum data plane, host processor, quantum control and measurement plane, and qubit technologies.

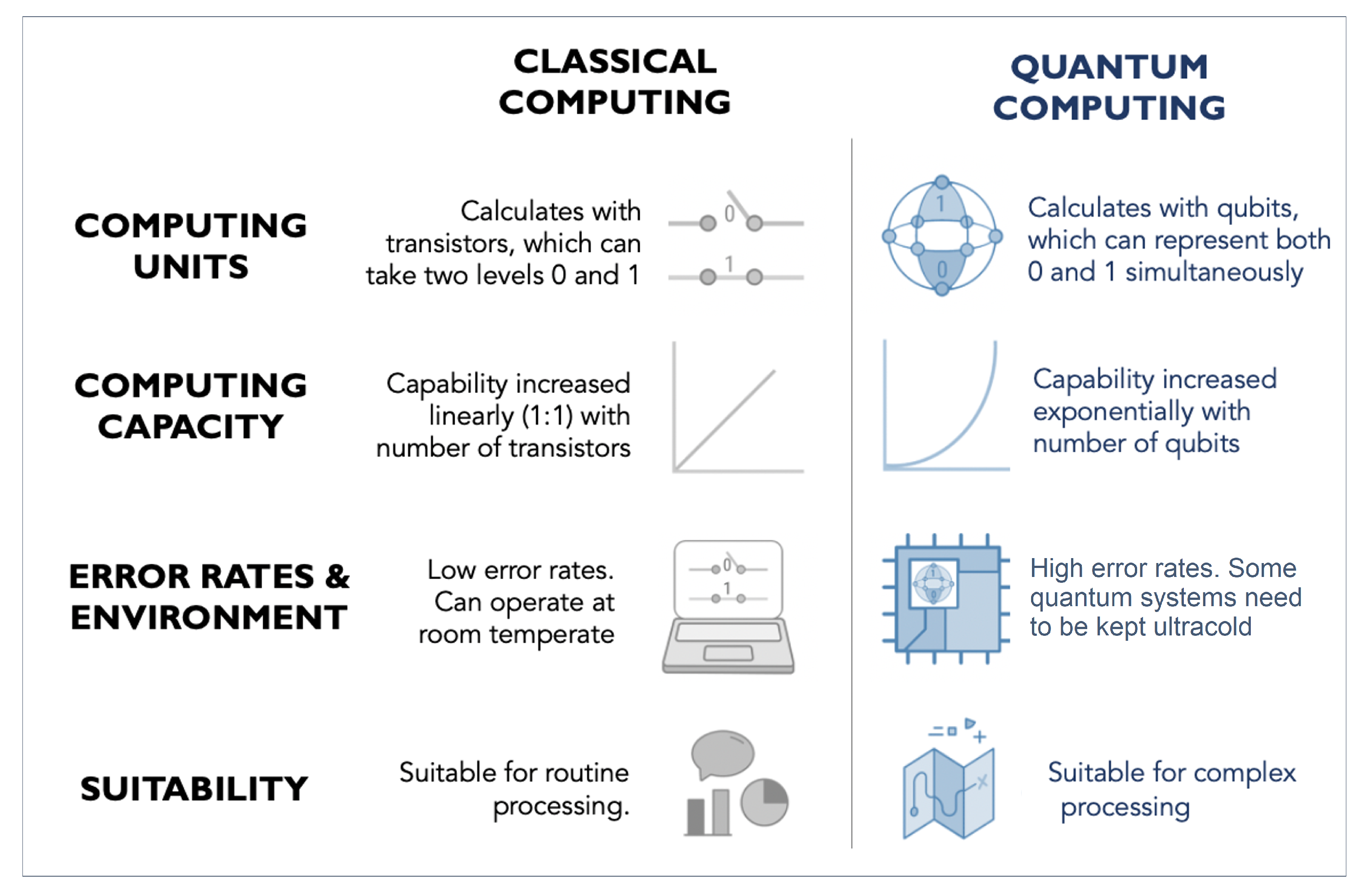

We refer the reader to Figure 1 for a comparison of quantum computing paradigms with classical computing approaches in terms of their strengths, weaknesses, and applicability. Unlike conventional computers that operate in terms of bits, the basic units of operation in a quantum computer are referred to as quantum bits, or “qubits”, which possess two states or levels, i.e., they can represent both ‘1’ and ‘0’ simultaneously.

Quantum physical systems, which leverage, for example, the orientation of a photon or the spin of an electron, are used to create qubits. We note that quantum computers come in several varieties, including one-qubit computers [37], two-qubit computers [38], and higher-qubit quantum computers. Key advancements in quantum computing were made in 2000, when the very first 5-qubit quantum computer was invented [39]. Since then, many important advancements have been made, and the best-known quantum computer of the current era is IBM’s newest quantum-computing chip, which contains 433 qubits [40]. However, the literature suggests that the minimum number of qubits needed to realize quantum supremacy is 50 [41]. Quantum supremacy is defined as the ability of a programmable quantum device to solve a problem that cannot be solved by classical computers in a feasible amount of time [42]. The behavior of qubits relates directly to the behavior of a spinning electron orbiting an atom’s nucleus, which can demonstrate three key quantum properties: quantum superposition, quantum entanglement, and quantum interference [43].

Quantum superposition refers to the fact that a spinning electron’s position cannot be pinpointed to any specific location at any time. On the contrary, it is calculated as a probability distribution in which the electron can exist at all locations at all times with varying probabilities. Quantum computers rely on quantum superposition in that they use a group of qubits for calculations: while classical computer bits may take on only the states 0 and 1, qubits can be either 0 or 1, or a linear combination of both. These linear combinations are termed superposition states. Since a qubit can exist in two states, the computing capacity of a $q$-qubit quantum computer grows exponentially, as $2^q$.
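To make the $2^q$ scaling concrete, the following minimal Python sketch counts the complex amplitudes needed to describe $q$ qubits and builds the equal superposition obtained by applying a Hadamard gate to every qubit. The function names and the figure of 16 bytes per amplitude (two 64-bit floats) are illustrative assumptions, not part of the surveyed work.

```python
import math


def state_vector_size(q):
    """Number of complex amplitudes needed to describe q qubits."""
    return 2 ** q


def memory_bytes(q, bytes_per_amplitude=16):
    """Classical memory for a full state vector (assumes 16 bytes per amplitude)."""
    return state_vector_size(q) * bytes_per_amplitude


def uniform_superposition(q):
    """Amplitudes of the equal superposition over all 2^q basis states."""
    n = state_vector_size(q)
    amplitude = 1.0 / math.sqrt(n)
    return [complex(amplitude, 0.0)] * n


def measurement_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    for q in (1, 2, 10, 50):
        print(q, "qubits:", state_vector_size(q), "amplitudes,",
              memory_bytes(q), "bytes to store classically")
```

Under these assumptions, storing the full state of 50 qubits classically would already take $2^{50} \times 16$ bytes, which illustrates why roughly 50 qubits is often quoted as a threshold for classical simulation.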

Quantum entanglement takes place in a highly intertwined pair of systems, such that knowledge of either one immediately provides information about the other, regardless of the distance between them. This nonintuitive fact was described by Einstein as “spooky action at a distance”, because it went against the rule that information could never be communicated beyond light speed. Quantum entanglement in physics is when two systems, such as photons or electrons, are so highly interlinked that obtaining information about one’s state (for example, the direction of one electron’s upward spin) would provide instantaneous information about the other’s state (for example, the direction of the second electron’s downward spin), no matter how far apart they are. Modifying one entangled qubit’s state therefore immediately perturbs the paired qubit’s state in quantum computers. Thereby, entanglement leads to the increased computational efficiency of quantum computers. Since processing one qubit provides knowledge about many qubits, doubling the number of qubits does not necessarily increase the number of entangled qubits. Quantum entanglement is therefore necessary for the exponentially faster performance of a quantum algorithm as compared with its classical counterpart.
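The correlation described above can be illustrated with a small classical simulation. The sketch below prepares the two-qubit Bell state $(|00\rangle + |11\rangle)/\sqrt{2}$ with a Hadamard gate followed by a CNOT gate and then samples measurement outcomes. It is a hedged illustration: the helper names and the amplitude ordering `[a00, a01, a10, a11]` are assumptions made for this example only.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]


def apply_hadamard_on_first(state):
    """Hadamard on the first (left) qubit of a 2-qubit state [a00, a01, a10, a11]."""
    s = 1.0 / math.sqrt(2.0)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def bell_state():
    """Start in |00>, apply H to the first qubit, then CNOT."""
    return apply_cnot(apply_hadamard_on_first([1.0, 0.0, 0.0, 0.0]))


def sample_outcomes(state, shots, seed=0):
    """Sample joint measurement outcomes according to |amplitude|^2."""
    rng = random.Random(seed)
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(LABELS, weights=probs, k=shots)


if __name__ == "__main__":
    outcomes = sample_outcomes(bell_state(), shots=10)
    print(outcomes)  # every outcome is "00" or "11": the two bits always agree
```

Each qubit on its own looks like a fair coin, yet the two results always agree, so reading one qubit reveals the other.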

Quantum interference occurs because, at the subatomic scale, particles have wavelike properties. These wavelike properties are often attributed to location, for example, where around a nucleus an electron might be. Two in-phase waves, which is to say they peak at the same time, constructively interfere, and the resulting wave peaks twice as high. Two waves that are out-of-phase, on the other hand, peak at opposite times and destructively interfere; the resulting wave is completely flat. All other phase differences will have results somewhere in between, with either a higher peak for constructive interference or a lower peak for destructive interference. In quantum computing, interference is used to affect probability amplitudes when measuring the energy level of qubits.
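As a minimal sketch of how interference acts on probability amplitudes, the Python example below applies a Hadamard gate twice to a single qubit. After the first gate both outcomes are equally likely; after the second, the two contributions to $|1\rangle$ cancel (destructive interference) while those to $|0\rangle$ add (constructive interference). Inserting a phase flip between the two gates reverses which outcome survives. The function names are illustrative assumptions.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [a0, a1]."""
    s = 1.0 / math.sqrt(2.0)
    a0, a1 = state
    return [s * (a0 + a1), s * (a0 - a1)]


def phase_flip(state):
    """Apply a Z gate: flips the sign (phase) of the |1> amplitude."""
    a0, a1 = state
    return [a0, -a1]


def probabilities(state):
    """Born rule: measurement probabilities are squared amplitude magnitudes."""
    return [abs(a) ** 2 for a in state]


if __name__ == "__main__":
    zero = [1.0, 0.0]
    once = hadamard(zero)                         # equal superposition
    twice = hadamard(once)                        # amplitudes for |1> cancel
    flipped = hadamard(phase_flip(once))          # amplitudes for |0> cancel
    print(probabilities(once))
    print(probabilities(twice))
    print(probabilities(flipped))
```

Quantum algorithms are typically arranged so that amplitudes leading to wrong answers cancel and those leading to correct answers reinforce one another.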

Quantum computing has applications in various disciplines, including communication, image processing, information theory, electronics, cryptography, etc. Practical quantum algorithms are emerging with the increasing availability of quantum computers. Quantum computing possesses a significant potential to bring a revolution to several verticals, such as financial modeling, weather precision, physics, and transportation (an illustration of salient verticals is presented in Figure 2). Quantum computing has already been used to improve different nonquantum algorithms being used in the aforementioned verticals. Moreover, the renewed efforts to envision physically scalable quantum computing hardware have promoted the concept that a fully envisioned quantum paradigm will be used to solve numerous computing challenges considering its intractable nature with the available computing resources.

The term quantum computing was first coined by Richard Feynman in 1981 and has since had a rich intellectual history. Figure 3 depicts a timeline of major events in this area. Noteworthy in the timeline is that, while there were somewhat larger gaps between events earlier on, the field has recently started experiencing a more rapid series of developments. For example, service providers have begun offering niche quantum computing products, as well as quantum cloud computing services (e.g., Amazon Braket). Recently, Google’s 54-qubit computer accomplished a task in merely 200 s that was estimated to take around 10,000 years on a classical computing system [44]. Nevertheless, quantum computing is still in its infancy, and it will take some time before quantum computing chips reach desktops or handhelds. An important factor inhibiting the commoditization of quantum computing is that controlling quantum effects is a delicate process, and any noise (e.g., stray heat) can flip 1s or 0s and disrupt quantum effects, such as superposition. This requires qubits to be operated under special conditions, such as very cold temperatures, sometimes very close to absolute zero. This also motivates research exploring fault-tolerant quantum computing [45]. Considering this fast-paced development of quantum computing, this is an opportune time for healthcare researchers and practitioners to investigate its benefits to healthcare systems.

Since quantum computer applications often deal with user data and network components that are part of traditional computing systems, a quantum computing system should ideally be capable of interfacing with and efficiently utilizing traditional computing systems. Qubit systems require carefully orchestrated control for efficient performance; this can be managed using conventional computing principles. An analogue gate-based quantum computing system could be mapped into various layers for building a basic understanding of its hardware components. These layers are responsible for performing different quantum operations and consist of the quantum control plane, measurement plane, and data plane. The control processor plane uses measurement outcomes to determine the sequence of operations and measurements that are required by the algorithm. It also supports the host processor, which looks after network access, user interfaces, and storage arrays.

The quantum data plane is the main component of the quantum computing ecosystem. It broadly consists of physical qubits and the structures required to bring them into an organized system. It contains the support circuits required to identify the state of qubits and perform gate operations for a gate-based system, or to control “the Hamiltonian for an analog computer” [46]. Control signals that are sent towards selected qubits set the Hamiltonian they experience, thereby controlling the gate operations for a digital quantum computer. For gate-based systems, a configurable network is provided to support the interaction of qubits, while analog systems depend on richer interactions among qubits enabled through this layer. Strong isolation is required for high qubit fidelity, but it limits connectivity, as each qubit may not be able to directly interact with every other qubit. Therefore, we need to map computation to the specific architectural constraints imposed by this layer. This shows that connectivity and operation fidelity are prime characteristics of the quantum data layer.

In conventional computing systems, the control and data plane are based on silicon technology. Control of the quantum data plane needs different technology and is performed externally by separating control and measurement layers. Analog qubit information should be sent to the specific qubits. Control information is transmitted through (data plane) wires electronically, in some of the systems. Network communication is handled in a way that it retains high specificity, affecting only the desired qubits without influencing other qubits that are not related to the underlying operation. However, it becomes challenging when the number of qubits grows; therefore, the number of qubits in a single module is another vital part of the quantum data plane.

The role of the quantum control and measurement plane is to convert the digital signals received from the control processor, which define the set of quantum operations to be performed on the qubits in the quantum data plane, into control signals for those qubits. It also translates the data plane’s analog output from the qubits into classical (i.e., binary) data, which are easier for the control processor to handle. Any imperfection in the isolation of these signals produces small stray signals at the qubits that cannot be corrected during an operation, thus resulting in inaccuracies in the states of qubits. Shielding the control signals is complex, since such signals must pass through the apparatus that is used for isolating the quantum data plane from the environment. Such isolation may rely on vacuum, cooling, or both. Signal crosstalk and qubit manufacturing errors change gradually as the configuration of the system changes. Even if the underlying quantum system allows fast operations, the speed can still be limited by the time required to generate and send a precise pulse.

The control processor plane identifies and invokes the series of quantum gate operations to be performed by the control and measurement plane. This sequence of steps implements the quantum algorithm provided by the host processor. The application should be custom-built, using the specific functionalities of the quantum layer that are offered by the software tool stack. One of the critical responsibilities of the control processor plane is to provide an algorithm for quantum error correction. Conventional data processing techniques are used to perform the different quantum operations that are required for error correction according to computed results. This introduces a delay that may slow down the quantum computer’s processing. The overhead can be reduced if the error correction is carried out in a time comparable to that needed for the quantum operations. As the computational task grows with the machine size, the control processor plane would inevitably consist of more elements to handle the increasing computational load. However, it is quite challenging to develop a control plane for large-scale quantum machines.

One technique to solve these challenges is to split the plane into components. The first component is a regular processor that can be tasked with running the quantum program, while the other is customized hardware that interacts directly with the measurement and control planes. The latter computes the next actions to be performed on the qubits by combining the controller’s output of higher-level instructions with the syndrome measurements. The key challenge is to design customized hardware that is both fast and scalable with machine size, as well as appropriate for creating high-level instruction abstraction. The control processor plane operates at a low abstraction level: it converts the compiled code into control- and measurement-layer commands. The user will not be able to directly interact with the control processor plane. Rather, it will be attached to the computing machine to speed up the execution of a few specific applications. Such architectures are employed in current computers that have accelerators for graphics, ML, and networking. These accelerators typically require a direct connection with the host processors and shared access to a part of their memory, which could be exploited to program the controller.
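The classical side of such an error-correction loop can be sketched with the three-bit repetition (bit-flip) code, which is used here purely as an illustrative assumption and is not a scheme named in the surveyed literature. In the sketch below, classical bits stand in for qubits, the two parity checks play the role of syndrome measurements, and a lookup table decides which correction the control processor would issue. On real hardware the parities would be measured through ancilla qubits without reading the data qubits directly.

```python
# Syndrome (parity of bits 0,1 ; parity of bits 1,2) -> index of the bit to flip.
# None means no correction is needed. Valid for at most one bit-flip error.
SYNDROME_TO_CORRECTION = {
    (0, 0): None,
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}


def encode(logical_bit):
    """Encode one logical bit into three physical bits."""
    return [logical_bit] * 3


def measure_syndrome(bits):
    """Parity checks between neighbouring bits (stand-in for syndrome measurement)."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def decode_and_correct(bits):
    """Look up the syndrome and apply the corresponding single-bit correction."""
    target = SYNDROME_TO_CORRECTION[measure_syndrome(bits)]
    corrected = list(bits)
    if target is not None:
        corrected[target] ^= 1
    return corrected


if __name__ == "__main__":
    noisy = encode(1)
    noisy[2] ^= 1  # inject a single bit-flip error on the last bit
    print(measure_syndrome(noisy), decode_and_correct(noisy))
```

The lookup is deliberately trivial; the latency concern raised above arises because, at scale, this decoding step must keep pace with the quantum operations it protects.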

Shor’s algorithm [47] opened the gate to possibilities for designing adequate systems that could implement quantum logic operations. There are many qubit systems, e.g., photon, solid-state spins, trapped-ion qubits, and superconducting qubits. Trapped-ion qubits and superconducting qubits are the two most promising platforms for quantum computing, and they are explained in the following subsections.

“The first quantum logic gate was developed in 1995 by utilizing trapped atomic ions”, building on a theoretical framework proposed in the same year [48]. Since that first demonstration, technical developments in qubit control have paved the way toward fully functional processors of quantum algorithms. Small-scale demonstrations show promising results; however, scaling trapped-ion systems remains a considerable challenge. As opposed to Very Large Scale Integration (VLSI), developing a trapped-ion-based quantum computer requires the integration of a range of technologies, including optical, radiofrequency, vacuum, laser, and coherent electronic controllers. These integration challenges must be thoroughly addressed before deploying a solution.

The data plane consists of ions and a mechanism to trap them in the desired positions. The measurement and control plane contains different lasers to perform certain operations, e.g., a precise laser source is used to target a specific ion and influence its quantum state. Measurements of the ions are performed using a laser, and the state of the ions is detected through photon detectors.

Superconducting qubits share some common characteristics with today’s silicon-based circuits. These qubits, when cooled, show quantized energy levels due to quantized states of electronic charge. The fact that they operate at a nanosecond time scale, show continuous improvement in coherence times, and can utilize lithographic scaling makes them an efficient solution for quantum computing. Given this combination of characteristics, superconducting qubits are considered for both quantum computation and quantum annealing.

In this section, we discussed the enabling technologies of quantum computing. We found that the key characteristics of a quantum data plane are the error rates of the single- and two-qubit gates. Furthermore, qubit coherence times, interqubit connectivity, and the number of qubits within a single module are vital in the quantum data plane. We also explained that the quantum computer’s speed is limited by the precise control signals that are required to perform quantum operations. The control processor plane and host computer run a traditional OS equipped with libraries for its operations, which provides software development tools and services. It runs the software development tools that are essential for running the control processor. These are different from the software that runs on today’s conventional computers. These systems provide the networking and storage capabilities that a quantum application might require during execution. Thus, connecting a quantum processor to a traditional computer enables it to leverage all its features without starting from scratch.

Recent research shows that quantum computing has a clear advantage over classical computing systems. Quantum computing provides an incremental speedup of disease diagnosis and treatment and, in some use cases, can drastically reduce the computation times from years to minutes [33,49]. It provokes innovative ways of realizing a higher level of skills for certain tasks, new architectures, and strategies. Therefore, quantum computing has an immense potential to be employed for a wide variety of use cases in the health sector in general and for healthcare service providers in particular, especially in the areas of accelerated diagnoses, personalized medicine, and price optimization. A literature survey shows that there is a visible increase in the use of classical modeling and quantum-based approaches, primarily due to the improvement in access to worldwide health-relevant data sources and availability. This section brings forward some potential use cases for the applications of quantum computing in healthcare; an illustration of these use cases is presented in Figure 4.

Quantum computers process data in a fundamentally novel way using quantum bits, as compared with classical computing, where integrated circuits determine the processing speed [50]. Rather than storing information only as 0s and 1s, quantum computers exploit the phenomenon of quantum entanglement, which paves the way for quantum algorithms that challenge classical computing, which is not designed to benefit from this phenomenon [51]. In the healthcare industry, quantum computers can exploit ML, optimization, and artificial intelligence (AI) to perform complex simulations [52]. Processes in healthcare often consist of complex correlations and well-connected structures of molecules with interacting electrons. The computational requirements for simulations and other operations in this domain naturally grow exponentially with the problem size, with time always being the limiting factor [53]. Therefore, we argue that quantum-computing-based systems are a natural fit for this use case.

The domain of precision medicine focuses on providing prevention and treatment methodologies tailored to individuals’ healthcare needs [54]. Due to the complexity of the human biological system, personalized medicine that goes beyond standard medical treatments will be required in the future [54]. Classical ML has shown effectiveness in predicting the risk of future diseases using EHRs [34]. However, there are still limitations in using classical ML approaches due to data quality and noise, feature size, and the complexity of relations among features. This provokes the idea of using quantum-enhanced ML, which could facilitate more accurate and granular early disease discovery [50]. Healthcare workers may then use tools to discover the impact of risks on individuals as their conditions change, through continual virtual diagnosis based on continuous data streams. Drug sensitivity is an ongoing research topic at the cellular level, considering the genomic features of cancer cells. Ongoing research is discovering the chemical properties of drug models that could be used for predicting the efficacy of cancer drugs at a granular level. Quantum-enhanced ML could expedite breakthroughs in the healthcare domain, mainly by enabling drug inference models [55,56].

Precision medicine has the goal of identifying and explaining relationships among causes and treatments and predicting the next course of action at an individual level. Traditional diagnosis based on the patient’s reported symptoms results in umbrella diagnoses, where the related treatments tend to sometimes fail [57]. Quantum computing could help in utilizing continuous data streams using personalized interventions in predicting diseases and allowing relevant treatments. Quantum-enhanced predictive medicine optimizes and personalizes healthcare services using continuous care [58]. Patient adherence and engagement at individual-level treatments could be supported by quantum-enhanced modeling. A use case of quantum-computing-based precision medicine is illustrated in Figure 5.

Early diagnosis of diseases could lead to better prognosis and treatment, as well as lower healthcare costs. For instance, it has been shown in the literature that the treatment cost is lowered by a factor of 4, whereas the survival rate could be increased “by a factor of 9 when the colon cancer is diagnosed at an early stage” [59]. Meanwhile, the current diagnostics and treatment for most diseases are costly and slow, with deviations in the diagnosis of around 15–20% [60]. The use of X-rays, CT scans, and MRIs has become critical over the past few years, with computer-aided diagnostics developing at a fast pace. Even so, diagnoses and treatment suffer from noise, data quality, and replicability issues. In this regard, quantum-assisted diagnosis has the potential to analyze medical images and oversee processing steps, such as edge detection in medical images, thereby improving image-aided diagnosis.

Current techniques use single-cell methods for diagnosis, while analytical methods are needed for single-cell sequencing data and flow cytometry. These techniques further require advanced data analytic methods, particularly for combining datasets from different techniques. In this context, cell classification on the basis of biochemical and physical attributes is regarded as one of the main challenges. While this classification is vital for critical diagnoses, such as distinguishing cancerous cells from healthy cells, it requires an extended feature space in which the number of predictor variables becomes considerably larger. Quantum ML techniques, such as quantum support vector machines (QSVM), enable such classifications and thus single-cell diagnostic methods. The discovery and characterization of biomarkers pave the way for the study of intricate omics datasets, such as metabolomics, transcriptomics, proteomics, and genomics. These processes could lead to an increased feature space, producing complex patterns and correlations that are nearly impossible to analyze using classical computational methodologies.

During the diagnosis process, quantum computing may help to support the diagnostics insights, eliminating the need for repetitive diagnosis and treatment. This paradigm helps in providing continuous monitoring and analysis of individuals’ health. It also helps in performing meta-analysis for cell-level diagnosis to determine the best possible procedure at a specific time. This could help to reduce the cost and allow extended data-driven diagnosis, bringing value for both medical practitioners and individuals.

Radiation therapy has been employed for the treatment of cancers; it uses radiation beams to eliminate cancerous cells and stop them from multiplying. However, radiotherapy is a sensitive process that requires highly precise computations to direct the beam at the cancerous tissue while avoiding any impact on the surrounding healthy cells. Treatment planning is therefore a demanding optimization problem that requires multiple complex simulations to reach an optimal solution. Quantum computing could make it possible to run such simulations simultaneously and arrive at a plan in an optimal time; hence, the spectrum of opportunities is vast if quantum concepts are employed for these simulations.

Quantum computing enables medical practitioners to model atomic-level molecular interactions, which is necessary for medical research [61]. This will be particularly essential for diagnosis, treatment, drug discovery, and analytics. Due to the advancements in quantum computing, it is now possible to encode tens of thousands of proteins and simulate their interactions with drugs, which has not been possible before [35]. Quantum computing helps process this information more effectively by orders of magnitude as compared with conventional computing capabilities [62]. Quantum computing allows doctors to simultaneously compare large collections of data and their permutations to identify the best patterns. Detection of biomarkers specific to a disease in the blood is now possible through gold nanoparticles by using known methods, such as bio-barcode assay. In this situation, the goal could be to exploit the comparisons used to help the identification of a diagnosis [63].

Identifying small molecules, macromolecules, and other molecular formations that develop into drugs that treat or cure diseases is a core activity of pharmaceutical companies. Many important drugs have been discovered in the past by way of scientific fortuity, with some notable examples being penicillin, chloral hydrate, LSD, and the smallpox vaccine. To tackle modern-day challenges such as those related to climate change and the COVID-19 virus, chemists cannot rely on luck alone. Modern-day drug discovery requires precise modeling of the energies dissipated in chemical reactions. Classical computers rely heavily on approximations for this, because even just calculating the quantum behavior of a couple of electrons involves very time-consuming computation. This reduces the precision and value of the model and puts the onus on the chemist to guide the model and validate the results in the lab. Conversely, quantum computers are already reliably modeling the properties of small molecules, such as lithium hydride [64], and have been shown to benefit quantum chemistry calculations requiring an explicit depiction of the wave function [65], because of high system entanglement and because they simulate properties at high accuracies. Finally, researchers have developed several quantum algorithms for chemistry that are superior to their classical computing counterparts, such as those that estimate the ground-state energies of molecular Hamiltonians and those that compute molecular reaction rates.

Research by Huggins et al. [66] has demonstrated that it is even possible to perform accurate quantum chemistry computations on noisy quantum circuitry. The researchers utilized a maximum of 16 qubits on Google’s 53-qubit quantum computer to run a Monte Carlo simulation developed for physics models consisting of fermions, the class of particles that includes electrons. The H4 molecule, molecular nitrogen, and solid diamond, involving a maximum of 120 electron orbitals, were simulated.

Creating pricing strategies is considered one of the key challenges that contribute to the complexities of the healthcare ecosystem [67]. In pricing analysis, quantum computing helps in risk analysis by predicting the current health of patients and predicting whether the patient is prone to a particular disease [68]. This is useful for optimizing insurance premiums and pricing [1]. A population-level analysis of disease risks, and mapping that to the quantum-based risk models, could help in computing financial risks and pricing models at a finer level. One of the key areas which could support pricing decisions is the detection of fraud; healthcare frauds cost billions of dollars in revenue [69]. In this regard, traditional data mining techniques offer insights into detecting and reducing healthcare fraud. Quantum computing could provide higher classification and pattern detection performance, thus uncovering malicious behavior attempting fraudulent medical claims. This could in turn help in better management of pricing models and lowering the costs associated with frauds [70]. Quantum computing can substantially accelerate pricing computations as well, resulting in not only lowering the premiums but also in developing customized plans [71].

Diagnostic tests and systems based on historical data, MRIs, CT scans, etc., are possible applications of quantum computing. Quantum computing could help in performing DNA sequencing, which takes 2–3 months using classical computing. It could also help perform cardiomyopathy analysis for DNA variants promptly. Although the growth of quantum computing brings novel benefits to healthcare, the broad use of novel quantum techniques may provoke security challenges. Nevertheless, there is a need to invest in quantum computing for better healthcare services provisioning. Furthermore, vaccine research could be automated more efficiently. Moreover, there is a need for distributed quantum computing, where a quantum supercomputer distributes its resources using the cloud.

Quantum-enhanced computing can decrease processing time in various healthcare applications. However, the requirements of quantum computing for healthcare cannot be generalized across different applications. For instance, the requirements of drug discovery are different from those of vaccine development systems. Therefore, quantum computing applications in healthcare require consideration of multiple factors for effective implementation. Table 3 outlines the requirements of quantum computing for the successful operation of healthcare systems, which are elaborated below.

Low computational time is one of the major requirements of any healthcare application. Classical computers with CPUs and GPUs are not capable of solving certain complex healthcare problems, e.g., simulating molecular structures. This motivates the use of quantum computing, which can exploit vast multidimensional spaces to represent large problems. A prominent example illustrating the power of quantum computing is Grover’s search algorithm [72], which is used to search an unstructured list of items. For instance, if we want to find a specific item among N items, a classical search has to check N/2 items on average or, in the worst case, all N items. Grover’s search algorithm finds the item by checking roughly √N items. This demonstrates a remarkable gain in computational efficiency. Let us assume we want to search through 1 trillion items, and every item takes 1 microsecond to check; a classical computer would need up to about 10^6 seconds (roughly 11.6 days) in the worst case, whereas a quantum computer would need about 10^6 checks, i.e., only about 1 second.
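The arithmetic behind the trillion-item example can be checked with a short sketch. It assumes that Grover's algorithm needs on the order of √N oracle calls (the constant factor of about π/4 is dropped) and that a quantum check costs the same 1 microsecond as a classical one; both are simplifications made for illustration, and the function names are hypothetical.

```python
import math

def classical_checks(n_items: int) -> tuple[float, int]:
    """Average and worst-case number of checks for a linear search."""
    return n_items / 2, n_items

def grover_checks(n_items: int) -> int:
    """Order-of-magnitude number of Grover oracle calls, taken as sqrt(N).

    Assumption: the constant factor (about pi/4) is ignored.
    """
    return math.isqrt(n_items)

N = 10**12                 # one trillion items
SECONDS_PER_CHECK = 1e-6   # assumed cost of a single check (1 microsecond)

average, worst = classical_checks(N)
classical_worst_seconds = worst * SECONDS_PER_CHECK
classical_worst_days = classical_worst_seconds / 86_400
quantum_seconds = grover_checks(N) * SECONDS_PER_CHECK

print(f"classical worst case: {classical_worst_seconds:.0f} s "
      f"(about {classical_worst_days:.1f} days)")
print(f"Grover estimate:      {quantum_seconds:.2f} s")
```

The quadratic speedup is visible in the ratio of the two figures: the classical worst case scales with N, the quantum estimate with √N.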

Fifth-generation (5G) connectivity has become an essential technology connecting smart medical objects. It provides robust integrity, lower latency, higher bandwidth, and an extremely large capacity. IoT objects work by transferring data to edge/cloud infrastructure for processing. Cloud storage suffers from security issues from a user perspective, thus raising novel challenges associated with the availability, integrity, and confidentiality of data. Quantum computing can benefit from 5G/6G networks to provide novel services. Quantum walks deliver a universal processing model and inherent cryptographic features, and can therefore provide efficient solutions for the healthcare paradigm. Quantum walks are the quantum mechanical counterparts of classical random walks and allow novel quantum algorithms and protocols to be developed on top of high-speed 5G/6G networks.
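To make the contrast with a classical random walk concrete, the following is a minimal NumPy sketch of a discrete-time quantum walk on a line with a Hadamard coin. The choice of coin, the symmetric initial coin state, and the function name are assumptions made for illustration; the sketch shows only the walk itself, not any of the cryptographic protocols built on it.

```python
import numpy as np

def hadamard_walk(steps: int) -> np.ndarray:
    """Position distribution of a Hadamard-coin quantum walk on a line.

    Positions run from -steps to +steps; coin 0 moves left, coin 1 moves right.
    """
    size = 2 * steps + 1
    psi = np.zeros((size, 2), dtype=complex)
    # Start at the origin with a symmetric coin state (|0> + i|1>)/sqrt(2).
    psi[steps] = np.array([1, 1j]) / np.sqrt(2)
    hadamard = np.array([[1, 1], [1, -1]]) / np.sqrt(2)

    for _ in range(steps):
        psi = psi @ hadamard.T            # toss the coin at every position
        shifted = np.zeros_like(psi)
        shifted[:-1, 0] = psi[1:, 0]      # coin 0: step to the left
        shifted[1:, 1] = psi[:-1, 1]      # coin 1: step to the right
        psi = shifted

    return (np.abs(psi) ** 2).sum(axis=1)

STEPS = 100
probabilities = hadamard_walk(STEPS)
positions = np.arange(-STEPS, STEPS + 1)
mean = float(probabilities @ positions)
quantum_std = float(np.sqrt(probabilities @ positions**2 - mean**2))
classical_std = float(np.sqrt(STEPS))     # unbiased classical random walk

print(f"quantum spread:   {quantum_std:.1f}")
print(f"classical spread: {classical_std:.1f}")
```

The quantum walk spreads linearly in the number of steps, whereas the classical walk spreads with its square root; this faster spreading is one reason quantum walks are used as a building block for algorithms.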

A few examples of using quantum walks for designing secure quantum applications include pseudorandom number generators, substitution boxes, quantum-based authentication, and image encryption protocols. These could help in providing secure ways to store and transmit data using high-speed networks. In a cryptographic scheme for the secure transmission of information, the entity’s data are encrypted before being sent to the cloud. In this context, key management, encryption, decryption, and access control are taken care of by the entities. This opens the way for novel research exploiting quantum technologies in 5G healthcare to enhance performance and resist both classical and quantum attacks.

Quantum communication (QC) is a subbranch of quantum technologies that concerns the distribution of quantum states of light for accomplishing a particular communication task [73,74]. The potential use of QC in commercial applications has been gaining popularity recently. Two leading technologies of QC are quantum key distribution (QKD) and quantum random-number generation (QRNG). QKD enables private communication by allowing remote entities to share a secret key, and together, these technologies promise to enable the perfect secrecy protocol to provide resistance to external attacks. The goal of the quantum Internet [75,76] is to develop a quantum communication network that connects quantum computers together to achieve quantum-enhanced network security, synchronization, and computing. The Quantum Internet Research Group (QIRG) of the Internet Research Task Force (IRTF) is responsible for the standardization process of the quantum Internet.

Quantum information has been strongly influenced by modern technological paradigms. The literature shows that high-dimensional quantum states are of increasing interest, especially with respect to quantum communication. A high-dimensional Hilbert space provides numerous benefits, such as large information capacity and noise resilience [77]. Moreover, the authors in [77] explored “multiple photonic degrees of freedom for generating high-dimensional quantum states”, using both integrated photonics and bulk optics. Different channels were considered for the propagation of the quantum states, e.g., single-mode, free-space links, aquatic channels, and multicore and multimode fibers.

Highly connected quantum states that are continuously interacting are challenging to simulate, because their many-body Hilbert space grows with the number of particles. One of the promising methods to improve scalability is transfer learning, which reuses the capability of ML models to solve similar yet different problem classes. By reusing features of neural network quantum states, we can exploit physics-inspired transfer learning protocols.

It has been verified that even simple neural networks (e.g., Boltzmann machines [78]) can precisely imitate the state of many-body quantum systems. Transfer learning reuses a trained model for another task that involves a similar system of larger size. In this regard, various physics-inspired protocols can be used for transfer learning to achieve scalability. FPGAs can also be used to emulate quantum computing algorithms, providing higher speed as compared with software-based simulations. However, the hardware resources required to emulate quantum systems become a critical challenge. In this regard, scalable FPGA-based solutions could help.

Fault tolerance in quantum computers is essential, as the components are connected in a fragile entangled state. Fault tolerance makes quantum computers robust and leads to high-fidelity quantum computations, allowing them to perform computations that are challenging for traditional computing. However, during processing, any error in a qubit or in the mechanism of measuring the qubit can have devastating consequences for the systems that depend on those computations. The error correction system itself also suffers from major issues. A feasible approach is to monitor the data qubits using ancillary qubits, which constantly check for logical errors so that they can be detected and corrected. Ancillary qubits have already shown promising results, but errors in the ancillary qubits themselves may propagate to the data qubits, thereby inflicting more errors on the operation. An error correction code can be embedded among the qubits, allowing the system to recover the encoded state when some qubits are wrong. It helps to limit error propagation by ensuring that a single faulty gate or time step produces only a single error.
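The idea of detecting errors through ancillary parity checks can be illustrated with the three-qubit bit-flip repetition code. The sketch below is a purely classical model under strong assumptions: only independent bit-flip errors occur, the two parity checks (which ancillary qubits would measure on hardware) are themselves error-free, and phase errors are ignored. All function names are hypothetical.

```python
import random

def encode(bit: int) -> list[int]:
    """Encode one logical bit into three physical bits."""
    return [bit, bit, bit]

def syndrome(block: list[int]) -> tuple[int, int]:
    """Parities that two ancillary qubits would measure: (q0^q1, q1^q2)."""
    return block[0] ^ block[1], block[1] ^ block[2]

# Which physical bit to flip back for each syndrome (None = no error seen).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(block: list[int]) -> list[int]:
    """Apply the correction indicated by the syndrome."""
    fixed = list(block)
    target = CORRECTION[syndrome(block)]
    if target is not None:
        fixed[target] ^= 1
    return fixed

def decode(block: list[int]) -> int:
    """Majority vote over the three physical bits."""
    return int(sum(block) >= 2)

def logical_error_rate(p_flip: float, trials: int, seed: int = 0) -> float:
    """Fraction of trials in which the logical bit is wrong after correction."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        noisy = [b ^ int(rng.random() < p_flip) for b in encode(0)]
        if decode(correct(noisy)) != 0:
            failures += 1
    return failures / trials

rate = logical_error_rate(p_flip=0.05, trials=20_000)
print(f"physical error rate 0.05 -> logical error rate {rate:.4f}")
```

Any single bit flip is corrected; the code fails only when two or more of the three bits flip, which is why the logical error rate falls below the physical one for small flip probabilities.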

In traditional systems, computing is performed in close proximity to the devices. However, quantum computers are located far away from users’ locality. If you want to share a virtual machine hosted on a quantum computer, it is challenging to access such a virtual machine; therefore, the availability requirements of quantum computers should be addressed carefully.

One of the requirements in layered quantum computing is the deployment of quantum gates. In this scenario, each quantum gate has the responsibility to perform specific operations on the quantum systems. Quantum gates are applied in multiple quantum computing applications due to hardware restrictions, such as the no-cloning theorem, which makes it challenging for a given quantum system to coordinate in more than one quantum gate simultaneously [79]. In this paradigm, the requirement of coupling topology arises; qubit-to-qubit coupling is one such example where the circuit depth relies on the fidelity of the involved gates.

Paler et al. [80] proposed a quantum approximate optimization algorithm (QAOA), which addresses combinatorial optimization problems. In this technique, the working mechanism depends on a positive integer parameter, which is directly related to the quality of the approximation. Farhi et al. [81] applied QAOA using a set of linear equations containing exactly three Boolean variables. This algorithm brings different advantages over traditional algorithms and efficiently solves the input problem. In [82], the authors used gate-model quantum computers for QAOA. This algorithm takes a combinatorial optimization problem as input and provides a string output satisfying a higher “fraction of the maximum number of clauses”. Farhi et al. [83] proposed QAOA for fixed qubit architectures that provides a method for programming gate models without considering requirements of error correction and compilation. The proposed method uses a sequence of unitaries that reside on the qubit-layout-generating states. Van Meter et al. [84] developed a blueprint of a multicomputer using Shor’s factoring algorithm [85]. A quantum-based multicomputer is designed using a quantum bus and nodes. The primary metric was the performance of the factorization process. Several optimization methods make this technique suitable for reducing latency and the circuit path.
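The basic QAOA loop mentioned above can be sketched as a small state-vector simulation. The example below is an illustration under stated assumptions, not the method of any of the cited works: it uses a single layer (the positive integer set to 1), MaxCut on a four-node ring as the combinatorial problem, and a coarse grid search instead of a classical optimizer. Function names and the `RING` graph are hypothetical.

```python
import numpy as np

def cut_values(n_qubits, edges):
    """Cut size of every bitstring 0..2^n-1 (the classical cost function)."""
    values = np.zeros(2 ** n_qubits)
    for z in range(2 ** n_qubits):
        bits = [(z >> q) & 1 for q in range(n_qubits)]
        values[z] = sum(bits[i] != bits[j] for i, j in edges)
    return values

def qaoa_expectation(n_qubits, edges, gamma, beta):
    """Expected cut size after one QAOA layer with angles (gamma, beta)."""
    cost = cut_values(n_qubits, edges)
    dim = 2 ** n_qubits
    # Uniform superposition, then the cost unitary exp(-i * gamma * C).
    state = np.full(dim, 1 / np.sqrt(dim), dtype=complex)
    state = np.exp(-1j * gamma * cost) * state
    # Mixer exp(-i * beta * X) applied to every qubit; since it acts on all
    # qubits identically, the axis-to-qubit ordering does not matter here.
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])
    psi = state.reshape([2] * n_qubits)
    for q in range(n_qubits):
        psi = np.moveaxis(np.tensordot(rx, psi, axes=([1], [q])), 0, q)
    probabilities = np.abs(psi.reshape(-1)) ** 2
    return float(probabilities @ cost)

def grid_search(n_qubits, edges, resolution=21):
    """Coarse search over the two angles; returns (value, gamma, beta)."""
    best = (-1.0, 0.0, 0.0)
    for gamma in np.linspace(0, np.pi, 2 * resolution - 1):
        for beta in np.linspace(0, np.pi / 2, resolution):
            value = qaoa_expectation(n_qubits, edges, gamma, beta)
            best = max(best, (value, float(gamma), float(beta)))
    return best

RING = [(0, 1), (1, 2), (2, 3), (3, 0)]   # hypothetical 4-node MaxCut instance
best_value, best_gamma, best_beta = grid_search(4, RING)
random_guess = len(RING) / 2              # expected cut of a uniformly random bitstring

print(f"random guessing: {random_guess:.2f} edges cut on average")
print(f"one QAOA layer:  {best_value:.2f} edges cut on average")
```

Increasing the number of layers would add one (gamma, beta) pair per layer, which is how the positive integer controls the quality of the approximation.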

Large-scale quantum computers could be realized by distributed topologies due to physical distances among quantum states. A quantum bus is deployed for the communication of quantum computers, where quantum algorithms (i.e., error correction) are run in a distributed topology. It requires a coordinated infrastructure and a communication protocol for distributed computation, communication, and quantum error correction for quantum applications. A system area networks model is required to have arbitrary quantum hardware handled by communication protocols.

Van Meter et al. [86] performed an experimental evaluation of different quantum error correction models for scalable quantum computing. Ahsan et al. [87] proposed a million-qubit quantum computer, suggesting the need “for large-scale integration of components and reliability of hardware technology using” simulation and modeling tools. In [88], the authors proposed a quantum generalization of feed-forward neural networks, showing that classical neurons can be generalized to the quantum case with reversibility. The authors demonstrated that the neuron module can be implemented photonically, thus making the practical implementation of the model feasible. In [89], the authors presented the idea of using quantum dots for implementing neural networks through dipole–dipole interactions and showed that the implementation is versatile and feasible.

Current implementations of quantum computers can be grouped into four generations [86]. First-generation quantum computers can be implemented with ion traps, with a physical speed in the kHz range, a logical speed in the Hz range, and footprints in the range of mm–cm [87,90,91,92,93,94,95]. Second-generation quantum computers can be implemented with distributed diamonds, superconducting quantum circuits, and linear optical strategies. The physical speed of these computers is in the MHz range, whereas the logical speed is in the kHz range, with a footprint size of —mm. Third-generation quantum computers are based on monolithic diamond, donor, and quantum dot technologies. Their logical speed is in the MHz range, while their physical speed is in the GHz range, with a footprint size of —μm. Fourth-generation quantum computers, which are still at an evolutionary stage, use topological quantum computing. This generation of quantum computers does not need any quantum error correction, as it has natural protection against decoherence. In order to address the open problem of enabling distributed quantum computing via anyonic particles, Monz et al. [96] proposed a practical realization of the scalable Shor algorithm on quantum computers. This work does not discuss the algorithm’s scalability and mainly demonstrates various implementations of a factorization algorithm on multiple architectures.

Quantum AI and quantum ML are emerging fields; therefore, a requirements analysis of both fields from the perspective of experimental quantum information processing is necessary. Lamata [97] studied the implementation of basic protocols using superconducting quantum circuits. Superconducting quantum circuits are implemented for realizing computations and quantum information processing. In [98], the authors proposed a quantum recommendation system that efficiently samples from a preference matrix without needing a matrix reconstruction. Benedetti et al. [99] proposed a classical–quantum DL architecture for near-term industrial devices. The authors presented a hybrid quantum–classical framework to tackle high-dimensional real-world ML datasets on continuous variables. In their proposed approach, DL is utilized for low-dimensional binary data. This scheme is well-suited for small-scale quantum processors and mainly for training unsupervised models. An overview of 40 theoretical and experimental (proof-of-concept) quantum technologies for three clinical use cases ((1) genomics and clinical research; (2) diagnostics; and (3) treatments and interventions) is presented in [63]. Furthermore, this research also elaborates upon the use of quantum machine learning on real clinical data, e.g., with quantum neural networks and quantum support vector classifiers.

In this section, we present novel requirements for healthcare systems implementation using quantum computing. Quantum computing for healthcare requires consideration of the diverse requirements of different infrastructures. Therefore, an effective realization of quantum healthcare systems requires healthcare infrastructure to be upgraded to coordinate with the high computational power provided by quantum computing.

This section presents an overview of the existing literature focused on developing quantum computing architecture for healthcare applications. We start this section by first providing a brief overview of general quantum computing architecture.

Different components of quantum computing are integrated to form a quantum computing architecture. The basic elements of a quantum computer are its quantum states (i.e., qubits), the architecture used for fault tolerance and error correction, quantum gates and circuits, quantum teleportation, solid-state electronics [100], etc. The design and analysis of these components and their different architectural combinations have been widely studied in the literature. For instance, most of the proposed/developed quantum computing architectures are layered architectures [101,102], which are a conventional approach to the design of complex information engineering architectures. So far, many researchers have provided different perspectives and guidelines for designing quantum computer architectures [103,104]. For instance, the fundamental criteria for viable quantum computing were introduced in [105], and the need for a quantum error correction mechanism within the quantum computer architecture is emphasized in [106,107]. Ref. [108] presents a comparative analysis of IBM Quantum vs. fully connected trapped ions.

The rapid advancement in quantum computers would be of little use to chemists in developing lifesaving drugs if only a limited number of algorithms for healthcare applications were optimized for these systems. Modern quantum algorithms are mostly hybrid, in that they leverage both classical and quantum computers. The design of hybrid algorithms for healthcare applications is of utmost importance. Firstly, they allow these applications to utilize computationally superior quantum hardware. Secondly, even if they do not fully utilize quantum hardware capability, they still promote innovation in quantum-inspired classical algorithms that surpass the originals. Both hybrid and quantum-inspired methods are organic evolutionary stages toward realizing pure quantum methods as the field matures.

Sengupta et al. [109] developed a quantum algorithm for quicker clinical prognostic analysis of COVID-19 patients. They leveraged the growing class of quantum machine learning (QML) algorithms, which essentially grew from quantum computing theory. The idea is to employ quantum computing for machine learning tasks for solution parallelism for optimal constraint solving using Moore’s law. The researchers report good performance for large-scale biased CT-scanned image classification due to efficient quantum simulation and fast convergence.

Mehboob et al. [110] designed a multiobjective quantum-inspired genetic algorithm (MQGA) to solve the problem of healthcare deadline scheduling. The motivation is that missing the deadline for healthcare applications may have dire consequences, such as patient injury or fatality. The proposed algorithm models healthcare applications as workflows, represented as directed acyclic graphs (DAGs). Individual tasks within the workflow are assigned a deadline to guarantee the quality of service (QoS).
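The workflow model described above (tasks, precedence constraints, and per-task deadlines) can be sketched in a few lines. This is not the MQGA itself; it only shows the underlying DAG representation and a deadline check, assuming unlimited parallel resources so that each task starts as soon as its predecessors finish. Task names, durations, and deadlines are hypothetical.

```python
from graphlib import TopologicalSorter

def earliest_finish(durations, predecessors):
    """Earliest finish time of each task, given task -> list of predecessors.

    Assumption: enough resources to run independent tasks in parallel.
    Raises graphlib.CycleError if the workflow is not acyclic.
    """
    finish = {}
    for task in TopologicalSorter(predecessors).static_order():
        start = max((finish[p] for p in predecessors.get(task, ())), default=0)
        finish[task] = start + durations[task]
    return finish

def missed_deadlines(finish, deadlines):
    """Tasks whose earliest finish time already exceeds their deadline."""
    return sorted(t for t, limit in deadlines.items() if finish[t] > limit)

# Hypothetical workflow; all times are in minutes.
durations = {"triage": 5, "lab_test": 20, "imaging": 15, "diagnosis": 10}
predecessors = {
    "lab_test": ["triage"],
    "imaging": ["triage"],
    "diagnosis": ["lab_test", "imaging"],
}
deadlines = {"imaging": 18, "diagnosis": 40}

finish_times = earliest_finish(durations, predecessors)
late_tasks = missed_deadlines(finish_times, deadlines)
print(finish_times)
print("missed deadlines:", late_tasks)
```

A scheduler such as a genetic algorithm would search over task-to-resource assignments and use a check of this kind as part of its fitness evaluation.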

There are several large initiatives that aim to help translate quantum algorithmic research into practical healthcare-related software. The UK has allocated GBP 8.4 billion for the development of a quantum-enhanced computing platform [111] for pharmaceutical R&D, and many pharmaceutical companies are collaborating with organizations experimenting with quantum computing to utilize quantum algorithms for drug discovery. For instance, the biotech company Biogen [112] is using the technology to develop novel drug candidates for neurological diseases, such as Alzheimer’s.

Different quantum-computing-based approaches can be noted in the literature. For instance, Liu et al. [113] proposed a logistic regression health assessment model using quantum optimal swarm optimization to detect different diseases at an early stage. Javidi [114] studied various research works that use 3D approaches for image visualization and quantum imaging under photon-starved conditions and proposed a visualization. Childs et al. [49] proposed a study using cloud-based quantum computers exploiting natural language processing on electronic healthcare data. Datta et al. [115] proposed “Aptamers for Detection and Diagnostics (ADD) and developed a mobile app acquiring optical data from conjugated quantum nanodots to identify molecules indicating” the presence of the SARS-CoV-2 virus. Koyama et al. [116] proposed a midinfrared spectroscopic system using a pulsed quantum cascade and high-speed wavelength-swept laser for healthcare applications, e.g., blood glucose measurement. Naresh et al. [117] proposed a quantum DH extension to dynamic quantum group key agreement for multiagent systems-based e-healthcare applications in smart cities.

Janani et al. [118] proposed quantum block-based scrambling and encryption for telehealth systems (image processing application); their proposed approach has two levels of security that work by selecting an initial seed value for encryption. The proposed system provides higher security against statistical and differential attacks. However, the proposed system produces immense overhead during complex computations of quantum cryptography. Qiu et al. [119] proposed a quantum digital signature for the access control of critical data in the Big Data paradigm that involves signing parties, including the signer, the arbitrator, and the receiver. The authors did not propose a new quantum computer; rather, they implemented a quantum protocol that does not put more overhead on the network. However, this scheme does not consider sensitive data transferred from the source to the destination during the proposed quantum computing implementation. Al-Latif et al. [120] proposed a quantum walk-based cryptography application, which is composed of substitution and permutations.

In a recent study [121], a hybrid framework based on blockchain and quantum computing was proposed for an electronic health record protection system, where blockchain is used to assign roles that authorize entities in the network to access data securely. However, the performance of the proposed system suffers, as the quantum computing and blockchain infrastructure impose immense network overhead. Therefore, the performance of the proposed system should be assessed thoroughly before its actual deployment. Latif et al. [122] proposed two novel quantum information hiding techniques, i.e., a steganography approach and a quantum image watermarking approach. The quantum steganography methodology hides a quantum secret image in a cover image using a controlled-NOT gate to secure embedded data, and the quantum watermarking approach hides a quantum watermarking gray image in a carrier image. Perumal et al. [123] proposed a quantum key management scheme with negligible overhead. However, this scheme lacks a comparison with the available approaches to demonstrate its efficacy.

Helgeson et al. [124] explored the impact of clinician awareness of quantum physics principles among patients and healthcare service providers and show that the principles of physics improve communication in the healthcare paradigm. However, this study is based on survey-based analysis, which did not provide an actual representation of the quantum healthcare implementation paradigm. An implementation-level study should be conducted based on the findings of this research to identify its implications. Similarly, Hastings et al. [125] suggested that healthcare professionals must be aware of the fact that quantum computing involves extensive mathematical understanding to ensure efficient services of quantum computing in healthcare applications. Similarly, Grady et al. [126] suggested that leadership in the quantum age requires engaging with stakeholders and resonating with creativity, energy, and products of the work that results from the mutual efforts enforced by the leaders. On a similar note, we argue that the quantum computing architecture for healthcare applications should be developed by considering the important requirements that we have identified in this paper (which are discussed in detail in Section 4 and are summarized in Table 3).

In summary, this section discusses state-of-the-art quantum computing healthcare literature. Table 4 shows a comparison of the available approaches in terms of different parameters. We defined key parameters based on quantum computing usage in the healthcare paradigm. Most of the existing studies do not consider IoT implementation in the quantum healthcare paradigm. Therefore, there is a need for IoT implementation in healthcare due to its greater implication in healthcare services provisioning.

Because healthcare applications are essentially life-critical, ensuring their security is fundamentally important. However, a major challenge faced by healthcare researchers is the siloed nature of healthcare systems, which impedes innovation, data sharing, and systematic progress [127]. Furthermore, Chuck Brooks, a leader in cybersecurity and the chair of the Quantum Security Alliance, suggests that effective implementation of security should allow academia, industry, researchers, and governments to collaborate effectively [128]. The security of quantum computing systems is also very important, because quantum computing can enable an exponential increase in computing capacity, which puts current cryptography-based approaches at risk. Whereas classical cryptography has been considered the theoretical basis for healthcare information security, quantum cryptography combines classical cryptography and quantum mechanics to offer unconditional security for both sides of healthcare communication among healthcare service consumers. Quantum cryptography has become the first commercially available use case of quantum computing. It is based on the fundamental laws of quantum mechanics rather than on unproven assumptions about computational complexity. A taxonomy of key security technologies that could help healthcare information security is presented in Figure 6 and described below.

Frequently refreshing strong cryptographic keys in healthcare IoT devices and terminals that are placed in public spaces is an important factor in mitigating the increasing number of medical data breach incidents plaguing the healthcare system. Quantum key distribution (QKD) is a protocol that establishes a mutually agreed key between two parties to ensure secure transmission. The QKD protocol uses certain quantum laws (which are generally based on complex characteristics of quantum computing) to detect information extraction attacks. Specifically, QKD detects attacks by leveraging the footprints left when an adversary attempts to steal information. QKD allows the generation of arbitrarily long keys, and it stops the key generation process if an attack is detected. The first QKD technique, known as BB84, was proposed by Bennett and Brassard [129], and it is the most widely used method in theoretical research on quantum computing. QKD has enormous potential to help overcome the key management and distribution limitations of classical algorithms. Shor et al. [130] presented a proof of the BB84 technique by relating its security to the entanglement purification protocol and the quantum error correction code. Devi et al. [2] suggested using QKD with an enhanced version of the BB84 protocol to share keys between communicating entities in the remote health monitoring of patients using wireless body sensor networks. Their results demonstrate that the approach helps protect the sensed data transmitted across the sensor network to the physician from attacks. Perumal et al. [123] designed an optimized QKD technique for heterogeneous medical devices. The communication is set up using quantum channels between authorized parties, and the key server distributes the encryption key as qubits over the quantum channel. Substantial research has been conducted on the QKD security protocol, and several novel improvements to the quantum computing security paradigm using QKD have been made so far.
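The way BB84 exposes an eavesdropper can be illustrated with a small classical simulation. The following is a minimal sketch under idealized assumptions (a noiseless channel, perfect single-photon sources and detectors, and a simple intercept-resend attacker); the function name `bb84_qber` and its parameters are hypothetical and are not taken from any of the cited works.

```python
import random


def bb84_qber(n_qubits, eavesdrop=False, seed=0):
    """Simulate idealized BB84 and return (QBER of the sifted key, sifted key length).

    Assumptions (illustrative only): noiseless channel, ideal devices,
    and an intercept-resend eavesdropper who picks her basis at random.
    """
    rng = random.Random(seed)

    def measure(bit, prep_basis, meas_basis):
        # Matching bases reproduce the bit; mismatched bases give a random outcome.
        return bit if prep_basis == meas_basis else rng.randint(0, 1)

    alice_bits = [rng.randint(0, 1) for _ in range(n_qubits)]
    alice_bases = [rng.randint(0, 1) for _ in range(n_qubits)]  # 0 = rectilinear, 1 = diagonal
    bob_bases = [rng.randint(0, 1) for _ in range(n_qubits)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if eavesdrop:
            # Eve measures in a random basis and resends her result in that basis.
            e_basis = rng.randint(0, 1)
            e_bit = measure(bit, a_basis, e_basis)
            bob_bits.append(measure(e_bit, e_basis, b_basis))
        else:
            bob_bits.append(measure(bit, a_basis, b_basis))

    # Sifting: keep only the positions where Alice and Bob used the same basis.
    sifted = [
        (a, b)
        for a, b, x, y in zip(alice_bits, bob_bits, alice_bases, bob_bases)
        if x == y
    ]
    errors = sum(a != b for a, b in sifted)
    qber = errors / len(sifted) if sifted else 0.0
    return qber, len(sifted)


if __name__ == "__main__":
    print("no eavesdropper:", bb84_qber(4000))
    print("intercept-resend:", bb84_qber(4000, eavesdrop=True))
```

In this idealized model the sifted key is error-free without an eavesdropper, whereas an intercept-resend attack pushes the quantum bit error rate toward roughly 25%. This is the "footprint" that lets the communicating parties abort key generation.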

Quantum d-level systems are attractive for healthcare because they offer the higher data transfer rates required by next-generation medical sensors. Consider the case of implantable brain–machine interface systems, where a huge amount of neural data [131] is transmitted by thousands of electrodes monitoring the brain tissue in the different cortical layers. With regard to QKD, d-level protocols promise to increase the transmitted key rate and to provide greater error resistance. In [132], the authors used d-level systems to protect against individual and concurrent attacks. They discussed two cryptosystems, where the first uses two mutually unbiased bases, while the second utilizes d+1 mutually unbiased bases. The proof of security for protocols with entangled photons against individual attacks was demonstrated in [133]. However, the challenge with this approach was the increased error rate. In [134], the authors proposed the decoy pulse method for BB84 in high-loss scenarios, in which a privileged user replaces signal pulses with multiphoton pulses. A security proof of a coherent-state protocol using Gaussian-modulated coherent states and homodyne detection against arbitrary coherent attacks is provided in [135]. In [136], the authors addressed security against common types of attacks that could be inflicted on quantum channels by eavesdroppers with vast computational power. The security of device-independent (DI) QKD against collective attacks was analyzed in [137] and extended in [138] to a more general form of attacks. A passive approach to security that uses a beam splitter to split each input pulse is presented in [139], together with a demonstration of its effectiveness. Table 5 presents a taxonomy and summary of different approaches that use d-level systems as a defense strategy to withstand security attacks.
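The rate argument for d-level systems can be made concrete with a back-of-the-envelope calculation. The sketch below assumes an ideal, noiseless, lossless channel and a uniformly random basis choice by both parties, so that a qudit carries log2(d) raw bits and survives sifting with probability 1/k when k bases are used. The function name is hypothetical, and the numbers are not secure key rates from [132].

```python
import math


def sifted_bits_per_qudit(d, n_bases):
    """Raw sifted bits per transmitted d-level system (idealized, no noise or loss).

    Each qudit encodes log2(d) bits; with n_bases chosen uniformly at random by
    both parties, the bases match with probability 1 / n_bases.
    """
    if d < 2 or n_bases < 1:
        raise ValueError("need d >= 2 and n_bases >= 1")
    return math.log2(d) / n_bases


if __name__ == "__main__":
    for d in (2, 4, 8):
        two = sifted_bits_per_qudit(d, 2)        # two mutually unbiased bases
        full = sifted_bits_per_qudit(d, d + 1)   # all d + 1 mutually unbiased bases
        print(f"d={d}: 2 bases -> {two:.3f} bits, d+1 bases -> {full:.3f} bits")
```

Under these assumptions the two-basis variant gains raw throughput as d grows, while the (d+1)-basis variant discards more signals during sifting. This calculation says nothing about error resistance, which requires the security analyses cited above.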

A system that relies on quantum computing for healthcare processing and security is vulnerable to a variety of security risks. These include, but are not limited to, authentication, interception, substitution, man-in-the-middle, protocol, and denial-of-service attacks. In this section, we present existing defense approaches to withstand different general attacks against quantum computing systems. For instance, Maroy et al. [140] proposed a defense strategy for BB84 that enforces security when random individual imperfections are present concurrently in the quantum sources and detectors. Similarly, Pawlowski et al. [141] proposed a semi-device-independent defense scheme against individual attacks that provides security when the devices are assumed to produce quantum systems of a given dimension. In [142], the authors presented a defensive scheme for a larger class of quantum protocols in which the key is generated by independent measurements. A comparative analysis of secret keys that violate the Bell inequality is presented in [143]. The authors suggested that any information available to eavesdroppers should be consistent with the nonsignaling principle.

Leverrier et al. [144] evaluated “the security of Gaussian continuous variable QKD with coherent states against arbitrary attacks in the finite-size scheme”. In a similar study, Moroder et al. [145] presented a method to evaluate the security of a practical distributed-phase-reference QKD against general attacks. A framework for continuous-variable QKD based on an orthogonal frequency division multiplexing scheme is presented in [146]. A comprehensive security analysis of continuous-variable MDI QKD in a finite-size scenario is presented in [147], and a defense for generic DI QKD protocols is presented in [148]. In [149], the authors presented a method “to prove the security of two-way QKD protocols against the most general quantum attack on an eavesdropper, which is based on an entropic uncertainty” relation. In [150], the authors examined Ekert’s original entanglement-based protocol against a general class of attacks. A taxonomy summarizing different defenses against general security attacks is presented in Table 6.

During the past few years, finite-key analysis has become a popular security scheme for QKD and has been integrated into the composable unconditional security proof. In [152], the authors address the security constraints of finite-length keys in different practical settings of BB84, including prepare-and-measure implementations without decoy states and entanglement-based techniques. Similarly, the finite-key analysis of MDI QKD presented in [153] works by removing detector side channels and achieves a key rate greater than that of fully device-independent QKD. A security proof against the general form of attacks in the finite-key regime is presented in [154], where the authors show the feasibility of long-distance implementations of MDI QKD within a specific signal transmission time frame. A practical prepare-and-measure partially device-independent BB84 protocol with finite resources is presented in [155]. A security analysis performed against discretionary communication exposure from the preparation process is presented in [156]. Table 7 presents the taxonomy and summary of the finite-key analysis security schemes.
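Why key length matters can be seen from a generic statistical argument: an error rate estimated from a finite sample is only known up to a fluctuation term that shrinks with the sample size. The sketch below uses the one-sided Hoeffding inequality purely as an illustration. It is not the bound derived in [152] through [156], and the function names and the choice of failure probability are assumptions.

```python
import math


def hoeffding_margin(n_samples, epsilon):
    """Deviation t such that P(true rate > observed rate + t) <= epsilon (Hoeffding)."""
    if n_samples <= 0 or not (0.0 < epsilon < 1.0):
        raise ValueError("need n_samples > 0 and 0 < epsilon < 1")
    return math.sqrt(math.log(1.0 / epsilon) / (2.0 * n_samples))


def qber_upper_bound(observed_qber, n_samples, epsilon):
    """Pessimistic error-rate estimate to use when only n_samples bits were tested."""
    return min(1.0, observed_qber + hoeffding_margin(n_samples, epsilon))


if __name__ == "__main__":
    for n in (10**3, 10**4, 10**6):
        bound = qber_upper_bound(0.02, n, 1e-10)
        print(f"n={n}: observed 2.0% -> worst-case {100 * bound:.2f}%")
```

With only a few thousand test bits the worst-case error rate is far above the observed value, which reduces or eliminates the extractable key. The asymptotic behavior is approached only for large block sizes.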

DI QKD [137] aims to fill the gap in the practical realization of QKD without considering the working mechanism of the underlying quantum devices. It requires a violation of the Bell inequality between both ends of the communication and can provide higher security than classical schemes through reduced security assumptions. In other words, the receivers at both ends need to observe a violation of the Bell inequality. The term DI refers to the fact that no information about the underlying devices is needed. In this case, the devices may even correspond to adversaries. Therefore, the identification of elements is necessary rather than a consideration of how quantum security is implemented [157]. In this context, DI QKD is capable of defending against different kinds of security vulnerabilities, including time-shift attacks [158], phase-remapping attacks [159], blinding attacks [160], and wavelength-dependent attacks [161]. Additionally, security vulnerabilities arising from quantum communication channels can be defended against using the technique presented in [162]. Furthermore, Broadbent et al. proposed a generalized two-mode Schrödinger cat states DI QKD protocol [163]. The taxonomy and summary of device-independent quantum key distribution are presented in Table 8.
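The Bell-inequality test at the heart of DI QKD can be illustrated with the CHSH form of the inequality. The sketch below assumes an ideal maximally entangled singlet pair, for which the textbook quantum prediction for the correlation of measurements along angles a and b is E(a, b) = -cos(a - b). The function names and the chosen measurement angles are illustrative assumptions and are not taken from [137].

```python
import itertools
import math


def singlet_correlation(angle_a, angle_b):
    """Textbook quantum correlation for an ideal singlet pair: E(a, b) = -cos(a - b)."""
    return -math.cos(angle_a - angle_b)


def chsh_quantum(a0, a1, b0, b1):
    """CHSH combination S = E(a0,b0) - E(a0,b1) + E(a1,b0) + E(a1,b1)."""
    e = singlet_correlation
    return e(a0, b0) - e(a0, b1) + e(a1, b0) + e(a1, b1)


def chsh_local_max():
    """Largest |S| reachable by deterministic local strategies with outcomes of +1 or -1."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((-1, 1), repeat=4):
        s = a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1
        best = max(best, abs(s))
    return best


if __name__ == "__main__":
    s = chsh_quantum(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)
    print("local bound:", chsh_local_max())
    print("quantum |S|:", abs(s))
```

Any local deterministic strategy is limited to |S| = 2, whereas the ideal entangled pair reaches 2√2 at these angles. Observing |S| > 2 certifies nonclassical correlations from the measurement statistics alone, which is why the internal workings of the devices need not be trusted.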

Table 8. Summary of countermeasures and security protocols using measurement-device-independent quantum key distribution.
